Abstract

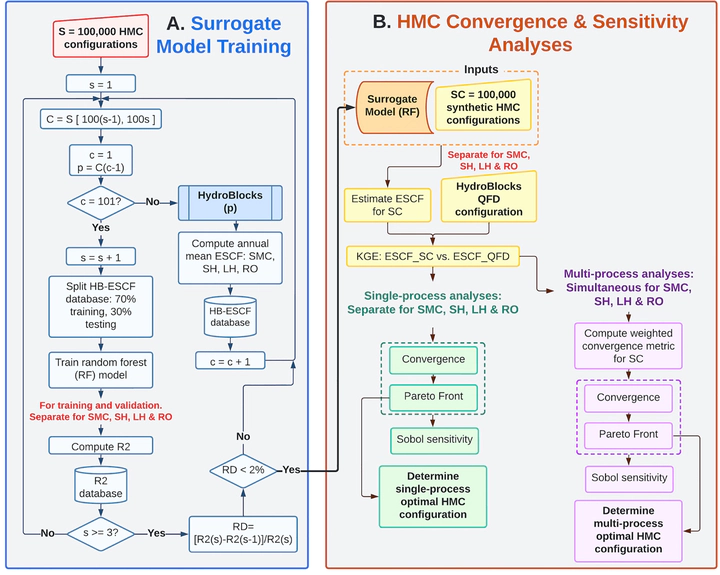

One of the persistent challenges of Land Surface Models (LSMs) is to determine a realistic yet efficient sub-grid representation of heterogeneous landscapes. This is particularly important in emulating the fine-scale and nonlinear interactions between water, energy, and biogeochemical fluxes at the land surface. In LSMs, landscape heterogeneity can be represented using sub-grid tiling techniques, which partition macroscale grid cells (e.g., 1°) into smaller units or “tiles.” However, there is currently no formal procedure to define the number of tiles required to adequately represent the heterogeneity of hydrologic processes within a macroscale grid cell, and across spatial scales. To address these challenges, a new approach is presented to diagnose sub-grid process heterogeneity formally and to infer an optimal number of tiles per macroscale grid cell. The approach is demonstrated using the HydroBlocks modeling framework coupled to Noah-MP LSM implemented over a 1.0-degree domain in Western Colorado in the United States. Our results show that (a) a surrogate model can accurately infer the spatial structure of the LSM’s time-averaged hydrological fields, with over 95% overall R2 performance in the validation stage; (b) the optimal configurations for a target level of complexity can be determined using a multi-objective Pareto efficiency analysis, which includes the simultaneous representation of the multi-scale heterogeneity of several processes; (c) the use of ∼100 tiles effectively reproduces a quasi-fully distributed LSM setup (i.e., 83,000 tiles) with approximately 1% of the computational expense. This method provides a path forward to efficiently determine the optimal tile configurations for LSMs while simultaneously considering the spatial heterogeneity and spatial accuracy of hydrologic processes.